How to create an app in screenpipe


how to create a pipe in screenpipe: a complete guide #tutorial #ai #typescript

screenpipe's pipe system lets you build powerful plugins that interact with captured screen and audio data. let's create a simple pipe that uses ollama to analyze your screen activity.

prerequisites

- screenpipe installed and running

- bun installed

- ollama installed with a model (we'll use deepseek-r1:1.5b)
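before scaffolding anything, it can help to confirm that ollama is reachable and the model is actually pulled. here is a minimal sketch, assuming ollama listens on its default port (11434) and using its `GET /api/tags` endpoint, which lists local models. `hasModel` and `checkOllama` are hypothetical helper names, not part of screenpipe or ollama:

```typescript
// The part of Ollama's GET /api/tags response that we care about.
interface OllamaTagsResponse {
  models: { name: string }[];
}

// Pure helper: true if `wanted` is among the locally pulled models.
function hasModel(tags: OllamaTagsResponse, wanted: string): boolean {
  return tags.models.some((m) => m.name === wanted);
}

// Assumption: Ollama runs locally on its default port, 11434.
async function checkOllama(
  wanted: string,
  baseUrl = "http://localhost:11434"
): Promise<boolean> {
  try {
    const res = await fetch(`${baseUrl}/api/tags`);
    if (!res.ok) return false;
    return hasModel((await res.json()) as OllamaTagsResponse, wanted);
  } catch {
    // Network error: Ollama is probably not running.
    return false;
  }
}
```

calling `await checkOllama("deepseek-r1:1.5b")` should resolve to `false` if the server is down or the model is missing, in which case `ollama pull deepseek-r1:1.5b` is the usual fix.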

1. create your pipe

first, let's create a new pipe using the official CLI:

bunx @screenpipe/create-pipe@latest

when prompted:

- name your pipe (e.g., "my-activity-analyzer")

- choose a directory

2. project structure

now open the project in your favorite editor, such as cursor or vscode:

cursor my-activity-analyzer

after creation, your project should look like this:

my-activity-analyzer/
├── README.md
├── package.json
├── pipe.json
├── public
├── src
│   ├── app
│   ├── components
│   ├── hooks
│   └── lib
├── tailwind.config.ts
└── tsconfig.json

let's remove some files that are not needed:

rm -rf src/app/api/intelligence src/components/obsidian-settings.tsx src/components/file-suggest-textarea.tsx

3. implement a cron job that will be used to analyze activity

create a new file src/app/api/analyze/route.ts and add this code:

import { NextResponse } from "next/server";
import { pipe } from "@screenpipe/js";
import { streamText } from "ai";
import { ollama } from "ollama-ai-provider";

export async function POST(request: Request) {
  try {
    const { messages, model } = await request.json();

    console.log("model:", model);

    // query last 5 minutes of activity
    const fiveMinutesAgo = new Date(Date.now() - 5 * 60 * 1000).toISOString();
    const results = await pipe.queryScreenpipe({
      startTime: fiveMinutesAgo,
      limit: 10,
      contentType: "all",
    });

    // setup ollama with selected model
    const provider = ollama(model);

    const result = streamText({
      model: provider,
      messages: [
        ...messages,
        {
          role: "user",
          content: `analyze this activity data and summarize what i've been doing: ${JSON.stringify(
            results
          )}`,
        },
      ],
    });

    return result.toDataStreamResponse();
  } catch (error) {
    console.error("error:", error);
    return NextResponse.json(
      { error: "failed to analyze activity" },
      { status: 500 }
    );
  }
}
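the route above interpolates the whole query result into the prompt. with a small model like deepseek-r1:1.5b, a long JSON dump may crowd out the rest of the context, so one option is to cap how much data goes in. here is a minimal sketch, not the tutorial's own code: `buildPrompt` and the `maxChars` budget are hypothetical, and the helper deliberately assumes nothing about the shape of each captured item:

```typescript
// Build the analysis prompt from captured items without exceeding a
// character budget. `items` is whatever array the query returned; the
// shape of each element is not assumed.
function buildPrompt(items: unknown[], maxChars = 4000): string {
  const header =
    "analyze this activity data and summarize what i've been doing: ";
  const kept: string[] = [];
  let used = 0;

  for (const item of items) {
    // JSON.stringify returns undefined for e.g. `undefined`; fall back to "null".
    const json = JSON.stringify(item) ?? "null";
    // +1 accounts for the comma that joins entries in the array below.
    if (used + json.length + 1 > maxChars) break;
    kept.push(json);
    used += json.length + 1;
  }

  return `${header}[${kept.join(",")}]`;
}
```

in the route, this could replace the template string, for example `content: buildPrompt(items)`, where `items` is the array of captured entries inside the query result (which property holds that array is an assumption to verify against the SDK version you have installed).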

4. add a simple vercel-like cron config to pipe.json for scheduling:

{
  "crons": [
    {
      "path": "/api/analyze",
      "schedule": "*/5 * * * *"
    }
  ]
}

5. update the main page to display the analysis

open src/app/page.tsx and add this code:

"use client";

import { useState } from "react";
import { Button } from "@/components/ui/button";
import { OllamaModelsList } from "@/components/ollama-models-list";
import { Label } from "@/components/ui/label";
import { useChat } from "ai/react";

export default function Home() {
  const [selectedModel, setSelectedModel] = useState("deepseek-r1:1.5b");
  const { messages, input, handleInputChange, handleSubmit } = useChat({
    body: {
      model: selectedModel,
    },
    api: "/api/analyze",
  });

  return (
    <main className="p-4 max-w-2xl mx-auto space-y-4">
      <div className="space-y-2">
        <Label htmlFor="model">ollama model</Label>
        <OllamaModelsList
          defaultValue={selectedModel}
          onChange={setSelectedModel}
        />
      </div>

      <div>
        {messages.map((message) => (
          <div key={message.id}>
            <div>{message.role === "user" ? "User: " : "AI: "}</div>
            <div>{message.content}</div>
          </div>
        ))}

        <form onSubmit={handleSubmit} className="flex gap-2">
          <input
            value={input}
            onChange={handleInputChange}
            placeholder="Type a message..."
            className="w-full"
          />
          <Button type="submit">Send</Button>
        </form>
      </div>
    </main>
  );
}

6. test locally

run your pipe:

bun i

bun dev

visit http://localhost:3000 to test your pipe.

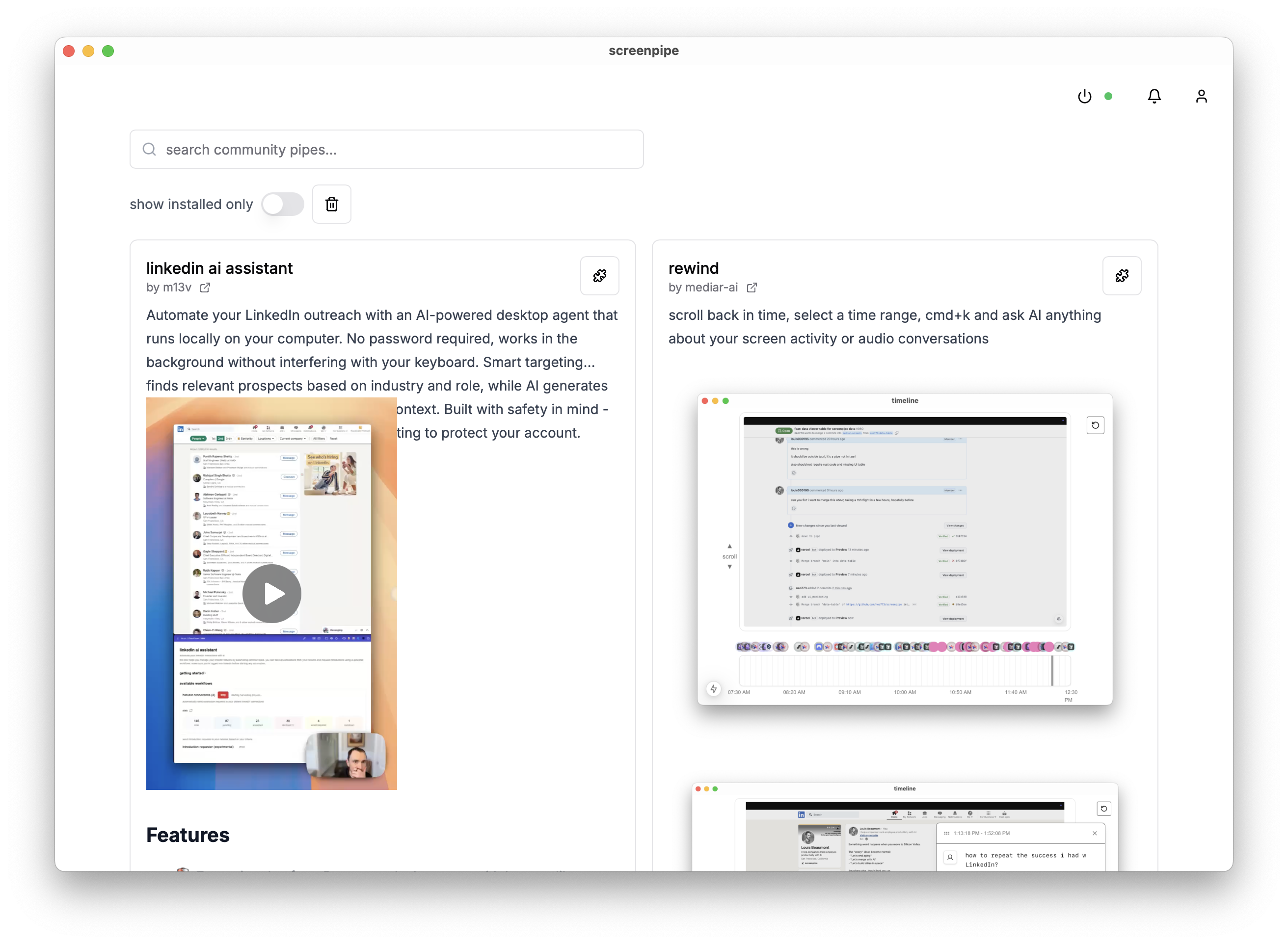
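if you want to exercise the route without the UI, you can POST to it directly. the sketch below builds a request whose body mirrors what the route destructures (`messages` and `model`). `buildAnalyzeRequest` and the `ChatMessage` type are hypothetical names, and the commented usage assumes the dev server is listening on port 3000:

```typescript
interface ChatMessage {
  role: "user" | "assistant" | "system";
  content: string;
}

// Build the fetch options for a POST to /api/analyze.
// The JSON body matches the fields the route reads: { messages, model }.
function buildAnalyzeRequest(messages: ChatMessage[], model: string) {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ messages, model }),
  };
}

// Usage against the local dev server (assumption: port 3000):
//
// const res = await fetch(
//   "http://localhost:3000/api/analyze",
//   buildAnalyzeRequest(
//     [{ role: "user", content: "what was i doing?" }],
//     "deepseek-r1:1.5b"
//   )
// );
// console.log(res.status);
```

a 500 status with the `failed to analyze activity` error usually means the `catch` branch of the route ran, so the server console is the next place to look.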

7. install in screenpipe

you can install your pipe in two ways:

a) through the UI:

- open screenpipe app

- go to pipes

- click "+" and enter your pipe's local path

b) using CLI:

screenpipe install /path/to/my-activity-analyzer

screenpipe enable my-activity-analyzer

how it works

let's break down the key parts:

- data querying: we use pipe.queryScreenpipe() to fetch recent screen/audio data

- ai processing: ollama analyzes the data through a simple prompt

- ui: a simple chat form sends your message to /api/analyze and displays the response

- scheduling: screenpipe will call the cron job every 5 minutes
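to make the schedule string concrete: in standard cron syntax, the first field is the minute, and `*/5` means "every minute divisible by 5". the sketch below illustrates only that semantics for three simple field forms (`*`, `*/n`, and a plain number). it is not how screenpipe parses schedules internally, and `matchesCronField` is a hypothetical helper:

```typescript
// Check one cron field against a value. Handles only "*", "*/n" and a
// plain number; lists ("1,2") and ranges ("1-5") are out of scope here.
function matchesCronField(field: string, value: number): boolean {
  if (field === "*") return true;

  const step = /^\*\/(\d+)$/.exec(field);
  if (step) {
    const n = Number(step[1]);
    return n > 0 && value % n === 0;
  }

  return Number(field) === value;
}

// Minutes of one hour at which the minute field "*/5" matches.
const minutes = Array.from({ length: 60 }, (_, m) => m).filter((m) =>
  matchesCronField("*/5", m)
);

console.log(minutes.join(" "));
```

the remaining four fields (hour, day of month, month, day of week) are all `*` in the config above, so the job is due at each of those minutes, every hour of every day.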

next steps

you can enhance your pipe by:

- adding configuration options

- connecting to external services

- adding more sophisticated UI components
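as one possible starting point for configuration options, the values hardcoded in the route (the model, the 5-minute window, the limit of 10) could move into a small typed config. everything in this sketch is hypothetical, including the `AnalyzerConfig` shape and the helper names; only the default values come from the code above:

```typescript
// Hypothetical config shape; defaults mirror the values used in the tutorial.
interface AnalyzerConfig {
  model: string;
  lookbackMinutes: number;
  limit: number;
}

const DEFAULTS: AnalyzerConfig = {
  model: "deepseek-r1:1.5b",
  lookbackMinutes: 5,
  limit: 10,
};

// Merge user overrides onto the defaults and reject nonsensical values.
function resolveConfig(overrides: Partial<AnalyzerConfig> = {}): AnalyzerConfig {
  const cfg = { ...DEFAULTS, ...overrides };
  if (!Number.isInteger(cfg.lookbackMinutes) || cfg.lookbackMinutes <= 0) {
    throw new RangeError("lookbackMinutes must be a positive integer");
  }
  if (!Number.isInteger(cfg.limit) || cfg.limit <= 0) {
    throw new RangeError("limit must be a positive integer");
  }
  return cfg;
}

// ISO timestamp for the start of the query window, as used for startTime.
function startTimeFor(cfg: AnalyzerConfig, now: Date = new Date()): string {
  return new Date(now.getTime() - cfg.lookbackMinutes * 60 * 1000).toISOString();
}
```

the route could then call `resolveConfig(...)` once per request and pass `startTimeFor(cfg)` and `cfg.limit` to the query, which keeps the tunable values in one place.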